Agent TARS: ByteDance’s Open-Source UI Automation AI

Agent TARS caught my eye recently, and I couldn’t resist giving it a try. Built by ByteDance, it's an interface-based AI agent that claims to control your computer's UI – essentially aiming to do what tools like Manus offer. But this one's open-source and free.

What follows is my complete experience of installing, exploring, and testing Agent TARS: what worked, what didn't, and what you should know if you're curious to try it yourself.

What is Agent TARS?

Agent TARS is an open-source agent that aims to control your operating system by interacting with the screen just like a human would: clicking, searching, typing, and more. Think of it as a robotic assistant that navigates your computer visually, opening apps or interacting with web pages.

This is not to be confused with UI TARS, its older predecessor.
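To make "interacting with the screen like a human" concrete, here is a minimal sketch of the observe, decide, act loop that screen-driving agents generally follow. This is not Agent TARS's code: the `Action` type, `run_agent`, and the injected callables are all hypothetical, and the real app's action format is not described in this article.

```python
from dataclasses import dataclass
from typing import Callable, List


# Hypothetical action type; Agent TARS's real action schema is not shown here.
@dataclass
class Action:
    kind: str         # e.g. "click", "type", "done"
    target: str = ""  # element description or screen coordinates
    text: str = ""    # text to type, if any


def run_agent(
    goal: str,
    take_screenshot: Callable[[], bytes],
    ask_model: Callable[[str, bytes, List[Action]], Action],
    execute: Callable[[Action], None],
    max_steps: int = 10,
) -> List[Action]:
    """Observe the screen, ask the model for the next action, execute it, repeat."""
    history: List[Action] = []
    for _ in range(max_steps):
        screen = take_screenshot()                 # observe: capture the current screen
        action = ask_model(goal, screen, history)  # decide: the model picks the next step
        if action.kind == "done":                  # the model signals the goal is reached
            break
        execute(action)                            # act: click or type on the real UI
        history.append(action)
    return history


# Demo with a scripted stand-in for the model and a no-op "screen".
script = [
    Action("click", "search box"),
    Action("type", "search box", "Tech Friend YouTube"),
    Action("done"),
]
performed: List[Action] = []
steps = run_agent(
    "find the channel",
    take_screenshot=lambda: b"",
    ask_model=lambda goal, screen, hist: script[len(hist)],
    execute=performed.append,
)
print([a.kind for a in steps])
```

In a real agent, `take_screenshot` would capture the display, `ask_model` would send the image to a vision-language model, and `execute` would drive the mouse and keyboard; injecting them as functions keeps the loop itself testable.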

Versions

There are two distinct versions:

- UI TARS: The older version, specifically version 0.9.

- Agent TARS: The newer version, 1.0 alpha 8 or later. The native Mac app available for installation is this 1.0 version.

Despite the Agent TARS name, the interface may still show "UIS". The native 1.0 app is the version you can install without building from source.
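Since the 0.9 and 1.0 builds are easy to mix up, a quick check on the version string can tell you which one you have. This is a minimal sketch that assumes a hypothetical version format such as `0.9.0` or `1.0.0-alpha.8` (with an optional leading `v`); the app's real version string may look different, and `is_agent_tars` is an illustrative name, not part of the app.

```python
import re


def is_agent_tars(version: str) -> bool:
    """Return True for 1.x+ builds (Agent TARS), False for 0.x builds (UI TARS).

    Assumes a hypothetical "MAJOR.MINOR[.PATCH][-prerelease]" string,
    e.g. "0.9.0" or "1.0.0-alpha.8"; adjust the pattern to the real format.
    """
    match = re.match(r"v?(\d+)\.\d+", version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)) >= 1


for v in ["0.9.0", "1.0.0-alpha.8", "v1.0.1"]:
    print(v, "->", "Agent TARS" if is_agent_tars(v) else "UI TARS")
```

Only the major number matters here: anything at 1 or above, including alpha pre-releases, counts as Agent TARS.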

Overview: Agent TARS vs. UI TARS

| Feature | UI TARS | Agent TARS |
|---|---|---|
| App Version | v0.9 | v1.0+ (alpha) |
| Installation Type | Manual (older method) | Native app (Mac) |
| System Access | Limited | Full computer access |
| Interface | Less polished | Improved UI and dark mode |
| Functionality | UI detection | Web + basic OS features |

Key Features of Agent TARS

- Native App Support (Mac): Easy to install and use without building from source.

- Supports Multiple AI Models: Works with Hugging Face, DeepSeek, Claude, and more.

- Web Browser Automation: Can search and interact with websites using a headless browser.

- MCP (Model Context Protocol) Integration: Allows for future customization and system memory management.

- Dark Mode UI: Visually cleaner and more user-friendly.

- Test Model Feature: Test AI response before executing commands.

- Remote Mode: Extends control capabilities beyond the local machine.

Visual Examples and Demonstrations

Let me walk you through how it looks when put to work. In one example I saw:

- A static portrait image was paired with a short reference video.

- The reference video was shown in the corner, and right next to it was the animated result.

- The result was striking: the image replicated the exact expressions from the video, including a tilted face, blinks, and even slight eye shifts.

Here’s a brief summary of a few examples:

| Reference Video | Input Image | Animated Output |
|---|---|---|
| Woman smiling with tilted head | Static cartoon image | Cartoon smiling with same tilt |
| Man frowning and blinking | Selfie photo | Photo shows identical expressions |
| Anime character talking | Anime still image | Character mouth moves in sync |

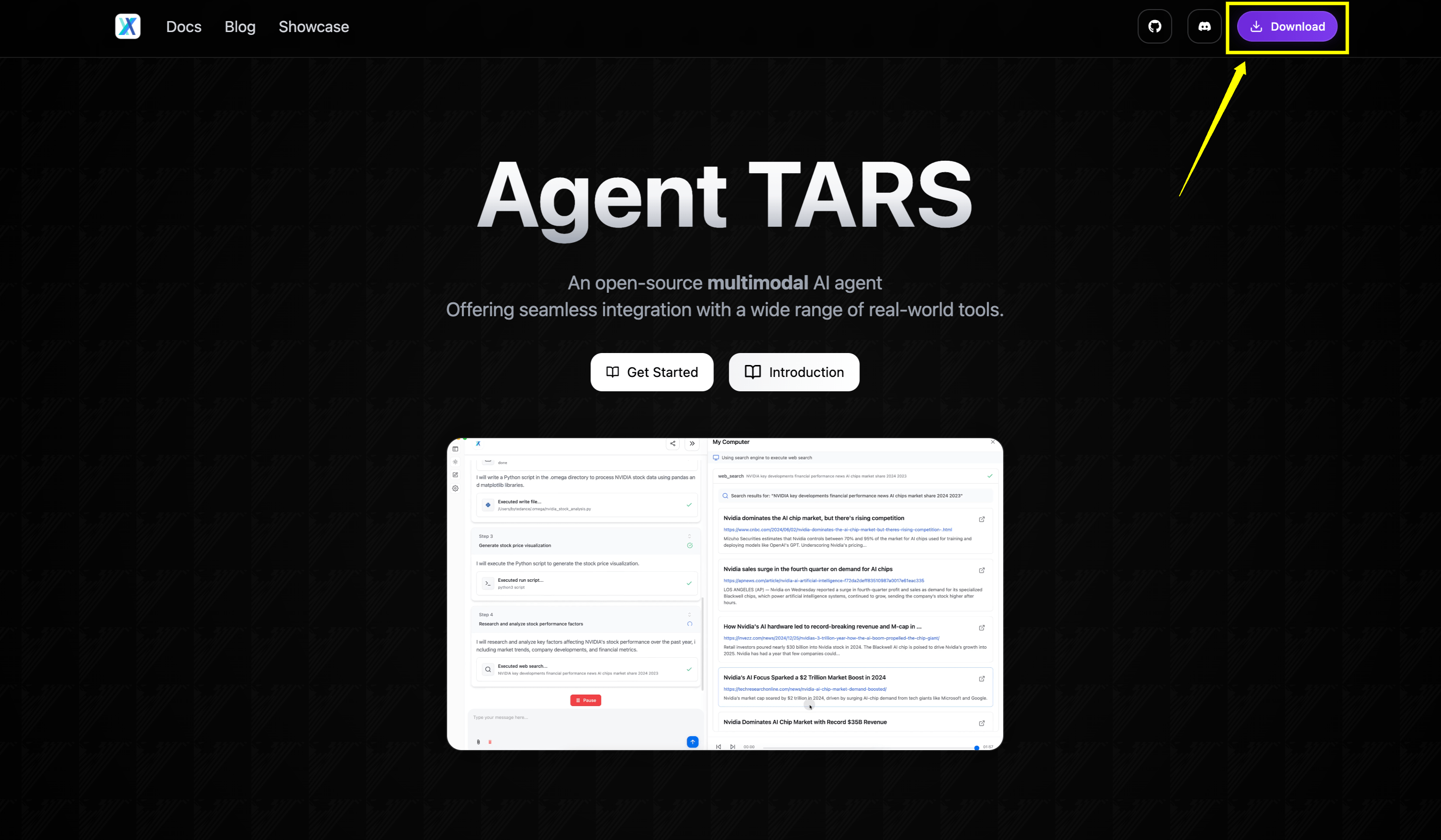

How to Get Started

Getting started with Agent Tars involves a few key steps. Here’s a simple walkthrough based on the latest information:

- Download: Obtain the application, ideally using the native app installer for easier setup. Make sure you download the correct, latest version (1.0 Agent Tars), as the initial download might be the older UI Tars (0.9).

- Grant Permissions: Enable the necessary system permissions so the agent can access your computer. This typically includes screen and audio access.

- Configure Model Provider: Open the settings and provide an API key for your chosen AI model provider. Supported options include vLLM, Hugging Face, DeepSeek, and Claude.

- Deploy Model: If you select Hugging Face, you’ll need to deploy the chosen model as described in the documentation.

- Test Model Provider: The app allows you to test your configured model provider to ensure it’s working correctly.

- Select Search Provider: Choose a search provider. Local browser search is available as a free option.

- Initiate Tasks: Once everything is set up, you can start giving Agent Tars tasks. For example, you might ask it to navigate to a YouTube page and write a comment.

After setup, try experimenting with different tasks to see what Agent Tars can do!
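The app's built-in test handles the "Test Model Provider" step for you. If you want to confirm a key works before pasting it into the settings, the sketch below sends one tiny chat request. It assumes the provider exposes an OpenAI-compatible `/chat/completions` endpoint, which DeepSeek (`https://api.deepseek.com`) and a local vLLM server (by default `http://localhost:8000/v1`) both do. The helper names and the `DEEPSEEK_API_KEY` variable are my own choices, and this is not how Agent TARS runs its test internally.

```python
import json
import os
import urllib.request


def build_test_request(base_url: str, api_key: str, model: str) -> urllib.request.Request:
    """Build a minimal chat-completion request for an OpenAI-compatible endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": "Reply with the word OK."}],
        "max_tokens": 5,  # keep the test cheap
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def check_provider(base_url: str, api_key: str, model: str) -> str:
    """Send the test request and return the reply text (raises on HTTP errors)."""
    req = build_test_request(base_url, api_key, model)
    with urllib.request.urlopen(req, timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Only contacts the API if you have exported a key yourself.
key = os.environ.get("DEEPSEEK_API_KEY")
if key:
    print(check_provider("https://api.deepseek.com", key, "deepseek-chat"))
```

For a local vLLM server, point `base_url` at `http://localhost:8000/v1` and use the model name you served; vLLM only checks the key if the server was started with `--api-key`.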

First Tasks I Tried

After setup, I wanted to see if it could actually control things or just simulate browsing.

- Agent TARS opened a headless browser.

- It searched for “Tech Friend YouTube”.

- It attempted to interact with the video.

- It found a video, but not my latest one.

- It couldn't comment because the headless browser had no login session.

Within the browser, it could handle basic actions such as searching on Google, clicking elements, and navigating tabs.
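The commenting failure comes down to where cookies live. A fresh headless session starts with an empty cookie store, so any site login from your normal browser is simply absent. The sketch below illustrates the difference with Python's standard `http.cookiejar`; it is a conceptual stand-in for browser profiles, not Agent TARS's code, and the `session_id` cookie is made up.

```python
import http.cookiejar
import os
import tempfile


def make_login_cookie(domain: str) -> http.cookiejar.Cookie:
    """Hypothetical session cookie standing in for a real site login."""
    return http.cookiejar.Cookie(
        version=0, name="session_id", value="abc123",
        port=None, port_specified=False,
        domain=domain, domain_specified=True, domain_initial_dot=domain.startswith("."),
        path="/", path_specified=True, secure=True,
        expires=None, discard=True,  # a session cookie with no expiry date
        comment=None, comment_url=None, rest={},
    )


profile = os.path.join(tempfile.mkdtemp(), "cookies.txt")

# 1. A "profile" where the user logged in once; its cookies are written to disk.
logged_in = http.cookiejar.MozillaCookieJar(profile)
logged_in.set_cookie(make_login_cookie(".youtube.com"))
logged_in.save(ignore_discard=True)  # keep session cookies too

# 2. A fresh headless session: an empty in-memory jar, so no login.
ephemeral = http.cookiejar.CookieJar()

# 3. A persistent session: reload the saved profile, so the login carries over.
persistent = http.cookiejar.MozillaCookieJar(profile)
persistent.load(ignore_discard=True)

print("ephemeral cookies:", len(ephemeral))
print("persistent cookies:", len(persistent))
```

Real browsers keep this state in a profile directory, which is why automation tools that reuse a profile can stay logged in. Whether Agent TARS lets you point its browser at an existing profile is not something I tested.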

I asked Agent TARS:

“Can you open VS Code?”

It tried to do so via the terminal but failed to find the "code" command. I then asked:

“Can you open Notepad?”

Response: “I can't control your operating system.”

This was the moment I realized it doesn’t yet have true UI-level control over apps installed locally, at least not without proper MCP integration or configuration.
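The VS Code failure is a common one: on macOS the `code` shell command only exists after you run "Shell Command: Install 'code' command in PATH" from VS Code's Command Palette. The sketch below shows the kind of check an agent (or you) could run first. `find_vscode_launcher` and `launch_vscode` are illustrative names, and the `open -a` fallback is a macOS-only assumption.

```python
import shutil
import subprocess
import sys
from typing import List


def find_vscode_launcher() -> List[str]:
    """Return a command that should open VS Code, or an empty list if none is found."""
    cli = shutil.which("code")  # None unless the `code` shell command is on PATH
    if cli:
        return [cli]
    if sys.platform == "darwin":
        # macOS fallback: ask the system to open the app bundle by name.
        return ["open", "-a", "Visual Studio Code"]
    return []


def launch_vscode() -> bool:
    """Launch VS Code if a launcher was found; return whether we tried."""
    cmd = find_vscode_launcher()
    if not cmd:
        return False
    subprocess.run(cmd, check=True)
    return True


print("launcher:", find_vscode_launcher() or "not found")
```

`launch_vscode` is defined but not called, so running the snippet only reports what it found.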

Comparing Agent Tars to Other Tools

Agent TARS is best described as an open-source Manus clone. Compared with Manus, there are several key differences:

- Scalability: Manus is designed to be more scalable, supporting larger workloads and more users.

- Security: Manus runs all operations inside a virtual machine (VM), providing complete isolation and security. In contrast, Agent Tars runs directly on your local machine, which could present security concerns.

- Parallelism: Manus operates in the cloud and can launch multiple VMs to perform tasks in parallel. Agent Tars, running locally, does not offer this level of parallelism.

- Cost: Agent TARS is open-source and free to download. Paired with a cost-effective model like DeepSeek V3, running it is expected to be very inexpensive, just a few cents per use.
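The "few cents per use" figure is easy to sanity-check: cost per task is input tokens times the input price plus output tokens times the output price. The sketch below uses placeholder numbers. Both the token counts and the per-million-token prices are assumptions for illustration, not DeepSeek's quoted rates, so check your provider's current pricing page.

```python
def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      input_price_per_million: float,
                      output_price_per_million: float) -> float:
    """Cost of one run: tokens in * input price + tokens out * output price."""
    return (input_tokens * input_price_per_million
            + output_tokens * output_price_per_million) / 1_000_000


# Placeholder task size and prices (USD per million tokens); not quoted from any provider.
cost = estimate_cost_usd(input_tokens=50_000, output_tokens=5_000,
                         input_price_per_million=0.27,
                         output_price_per_million=1.10)
print(f"${cost:.4f} per task")
```

With these assumed numbers a task costs about two cents. Agent loops resend context on every step, though, so long multi-step tasks can use far more input tokens than a single chat.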

Using Different Models

I tried using DeepSeek V3, which is cheap and worked fairly well. Here's a comparison:

| Model | Cost | Quality | Response Time |
|---|---|---|---|
| DeepSeek | Low | Decent | Fast |
| Claude | High | Very smart | Moderate |
| Hugging Face | Medium | Depends on model | Varies |

You can test any of these via the built-in test tool before activating them.

Limitations and Observed Issues

Based on my testing, I ran into several limitations:

- Lack of Operating System Control: Despite the stated goal, the agent did not appear capable of controlling the operating system through the user interface. It explicitly stated it could not perform this action.

- Headless Browser Session Issues: The use of a headless browser means the agent does not retain authentication sessions. This prevents it from performing actions that require being logged in, such as commenting on videos.

- Model Performance: DeepSeek V3, while cheap, initially seemed "too dumb" for the task, though it did later carry out planning steps.

- API Key Requirement: Setup requires API keys for model providers and possibly search providers (unless you use local browser search). Getting API keys is an extra hurdle that non-technical users may not be used to.

- Security Concerns: Agent Tars runs on the local computer. This raises potential security issues compared to solutions that run entirely within a virtual machine.

- Scalability: It is not designed for running multiple tasks in parallel as easily as cloud-based solutions.

- Installation Confusion: Initially, the downloaded version might be the older UI Tars (0.9) instead of the desired Agent Tars (1.0).

Frequently Asked Questions (FAQs)

Can Agent Tars control my operating system?

In my test, it explicitly stated that it could not control the operating system through the user interface.

Is it secure?

It runs on your local computer, unlike solutions running in a secure VM, which raises potential security considerations.

Can it run multiple tasks in parallel?

It is not designed to run multiple sessions in parallel easily, in contrast to cloud-based tools.

Is Agent Tars free?

Yes, it is open-source and free to download. The cost comes from paying for API usage for the AI models and search providers (unless free options like local browser search are used).

What AI models can I use?

The settings showed options for vLLM, Hugging Face, DeepSeek, and Claude.

Why can't it comment on videos?

The headless browser it uses does not keep your login session, preventing it from performing actions that require authentication.

Final Thoughts

If you're curious about local AI agents and want to explore something that interacts with your screen, Agent TARS is worth checking out, especially since it's free. It may not replace tools like Manus just yet, but it's heading in that direction.

I’ll definitely keep testing and updating you as it evolves.